Now that the press flurry over the NTSB's report on the Autonomous

Driving System (ADS) fatality in Tempe has subsided, it's important to reflect

on the lessons to be learned. Hats off to the NTSB for absolutely nailing this.

Cheers to those in the press who got the messaging right. But not everyone did. The goal

of this essay is to help focus on the right lessons to learn, clarify publicly

stated misconceptions, and emphasize the most important take-aways.

I encourage everyone in the AV industry to watch the first 5 and a half minutes of the NTSB board meeting video (YouTube: Link // NTSB Link).

Safety leadership should watch the whole thing. Probably twice. Then present a

summary at your company's lunch & learn.

Pay particular attention to this part from

Chairman Sumwalt: "If your company

tests automated driving systems on public roads, this crash -- it was about

you. If you use roads where automated

driving systems were being tested, this crash -- it was about you."

I live in Pittsburgh and these public road tests happen near

my place of work and my home. I take the lessons from this crash personally. In principle, every

time I cross a street I'm potentially placed at risk by any company that might

be cutting corners on safety. (I hope that's none. All the companies testing

here have voluntarily submitted compliance reports for the well-drafted PennDOT

testing guidelines. But not every state has those, and those guidelines were

developed largely in response to the fatality we’re discussing.)

I also have longtime friends who have invested their

careers in this technology. They have brought a vibrant and promising industry

to Pittsburgh and other cities. Negative

publicity resulting from a major mishap can threaten the jobs of those employed

by those companies.

So it is essential for all of us to get safety right.

The first step, for anyone in charge of testing who doesn't know what a Safety Management System (SMS) is: (A) Watch that NTSB hearing intro. (B) Pause testing on public roads until your company makes a good start down that path. (Again, the PennDOT guidelines are a reasonable first step accepted by a number of companies: LINK)

You’ll sleep better having dramatically

improved your company’s safety culture before anyone gets hurt unnecessarily.

Clearing up some misconceptions

I’ve seen

some articles and commentary that missed the point of all of this.

Large segments of coverage emphasized technical shortcomings of the system. That's not the point. Other coverage highlighted test driver distraction. That's not the point either. Some blamed the darkness, which is simply wrong: the NTSB estimates the pedestrian was visible at the full line of sight range of 637 feet. The fatal

mishap involved technical shortcomings, and the test driver was not paying

adequate attention. Both contributed to the mishap, and both were bad things.

But the lesson to

learn is that solid safety culture is without a doubt necessary to prevent

avoidable fatalities like these. That is the Point.

To make the most of this teachable moment let's break things

down further. These discussions are not really about the particular test

platform that was involved. The NTSB report gave that company credit for

significant improvement. Rather, the objective is to make sure everyone is

focused on ensuring they have learned the most important lesson so we don’t

suffer another avoidable ADS testing fatality.

A self-driving car killed someone - NOT THE POINT

This was not a self-driving car. It was a test platform for

Automated Driving System (ADS) technology. The difference is night and day. Any argument that this vehicle was safe to

operate on public roads hinged on a human driver not only taking complete

responsibility for operational safety, but also being able to intervene when

the test vehicle inevitably made a mistake. It's not a fully automated

self-driving car if a driver is required to hover with hands above the steering

wheel and foot above the brake pedal the entire time the vehicle is operating.

It's a test vehicle. The correct statement is: a test

vehicle for developing ADS technology killed someone.

The pedestrian was initially said to have jumped out of the dark in front of the car - NOT THE POINT

I still hear this sometimes based on the initial video clip

that was released immediately after the mishap. The pedestrian walked across

almost 4 lanes of road in view of the test vehicle before being struck, with sufficient lighting to have been seen by the driver. The

test vehicle detected the pedestrian 5.6 seconds before the crash. That was

plenty of time to avoid the crash, and plenty of time to track the pedestrian

crossing the street to predict that a crash would occur. Attempting to claim

that this crash was unavoidable is incorrect, and won't prevent the next ADS

testing fatality.

It's the pedestrian's fault for jaywalking - NOT THE POINT

Jaywalking is what people do when it is 125 yards to the

nearest intersection and there is a paved walkway on the median. Even if there

is a sign saying not to cross. Tearing

up the paved walkway might help a little on this particular stretch of road,

but that's not going to prevent jaywalking as a potential cause of the next ADS

testing fatality.

Victim's apparent drug use - NOT THE POINT

It is unlikely that the victim was a fully functional,

alert pedestrian. But much of the population isn't in this category for other

reasons. Children, distracted walkers, and others with less than perfect

capabilities and attention cross the street every day, and we expect drivers to

do their best to avoid hitting them.

There is no indication that the victim’s medical condition substantively

caused the fatality. (We're back to the fact that the pedestrian did not jump

in front of the car.) It would be unreasonable to insist that the public has the

responsibility to be fully alert and ready to jump out of the way of an errant ADS

test platform at all times they are outside their homes.

Tracking and classification failure - NOT THE POINT

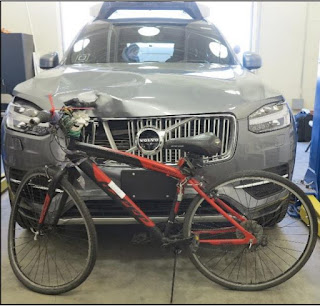

The ADS on the test vehicle suffered some technical

issues that prevented predicting where the pedestrian would be when the test

vehicle got there, or even recognizing the object it was sensing was a

pedestrian walking a bicycle. However, the point of operating the test vehicle was

to find and fix defects.

Defects were expected, and should be expected on other ADS test

vehicles. That's why there is a human safety driver. Forbidding public road

testing of imperfect ADS systems basically outlaws road testing at this stage.

Blaming the technology won't prevent the next ADS testing fatality, but it

could hurt the industry for no reason.

It's the technology's fault for ignoring jaywalkers - NOT THE POINT

This idea has been circulating, but apparently it isn't quite true. Jaywalkers aren't ignored. Rather, according to the information presented by the NTSB, a pedestrian isn't initially expected to cross the street. Once the pedestrian moves for a while, a track is built up that could indicate street crossing, but until then movement into the street is considered unexpected if the pedestrian is not at a designated crossing location. A deployment-ready

ADS could potentially use a more sophisticated approach to predict when a

pedestrian would enter the roadway.

Regardless of implementation, this did not contribute to the

fatality because the system never actually classified the victim as a

pedestrian. Again, improving this or other ADS technical features won't prevent

the next ADS testing fatality. That’s because testing safety is about the

safety driver, not which ADS prototype functions happen to be active on any

particular test run.

ADS emergency braking behavior - NOT THE POINT

The ADS emergency braking function had behaviors that could hinder

its ability to provide backup support to the safety driver. Perhaps another

design could have done better for this particular mishap. However, it wasn't

the job of the ADS emergency braking to avoid hitting a pedestrian. That was

the safety driver's job. Improving ADS emergency braking capabilities might

reduce the probability of an ADS testing fatality, but won't entirely prevent the

next fatality from happening sooner than it should.

Native emergency braking disabled - NOT THE POINT

It looks bad to have disabled the built-in emergency braking

system on the passenger vehicle used as the test platform. The purpose of such

systems is to help out after the driver makes a mistake. In this case there is

a good, but not perfect, chance it would have helped. But as with the ADS

emergency braking function, this simply improves the odds. Any safety expert is

going to say your odds are better with both belt and suspenders, but enabling

this function alone won't entirely prevent the next ADS testing fatality from

happening before it should.

Inattentive safety driver - NOT THE POINT

There is no doubt that an inattentive safety driver is

dangerous when supervising an ADS test vehicle. And yet, driver complacency is

the expected outcome of asking a human to supervise an automated system that

works most of the time. That’s why it’s important to ensure that driver

monitoring is done continually and used to provide feedback. (In this case a

form of driver monitoring equipment was installed, but data was apparently not used

in a way that assured effective driver alertness.)

While enhanced training and stringent driver selection can

help, effective analysis and action taken upon monitoring data is required to

ensure that drivers are actually paying attention in practice. Simply firing

this driver without changing anything else won't prevent the next ADS testing

fatality from happening to some other driver who has slipped into bad operational

habits.

A fatality is regrettable, but human drivers killed about 100 people that same day with minimal news attention - NOT THE POINT

Some commentators point out the ratio of fatalities caused

by test vehicles vs. general automotive fatality rates. They then generally

argue that a few deaths in comparison to the ongoing carnage of regular cars is

a necessary and appropriate price to pay for progress. However, this argument

is not statistically valid.

Consider a reasonable goal that ADS testing (with highly

qualified, alert drivers presumed) should be no more dangerous than the risk

presented by normal cars. For normal US cars that's ballpark 500 million road

miles per pedestrian fatality. This includes mishaps caused by drunk,

distracted, and speeding drivers. Because current test fleets drive far fewer miles than that, the "budget" for fatal accidents during the ADS road testing phase should, at this early stage, still be zero.
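To make the arithmetic behind that "budget" concrete, here is a rough sketch. The 500 million miles per pedestrian fatality figure is the ballpark quoted above. The fleet mileages are purely hypothetical round numbers chosen for illustration, not reported figures for any company, and treating fatalities as a Poisson process is a simplifying assumption added here.

```python
import math

# Ballpark from the essay: road miles per pedestrian fatality for normal US cars.
MILES_PER_PEDESTRIAN_FATALITY = 500e6


def expected_fatalities(test_miles, miles_per_fatality=MILES_PER_PEDESTRIAN_FATALITY):
    """Expected pedestrian fatalities if testing were exactly as risky as normal cars."""
    return test_miles / miles_per_fatality


def prob_at_least_one(test_miles, miles_per_fatality=MILES_PER_PEDESTRIAN_FATALITY):
    """Chance of one or more fatalities, assuming a Poisson model (an assumption)."""
    return 1.0 - math.exp(-expected_fatalities(test_miles, miles_per_fatality))


# Hypothetical cumulative test mileages, for illustration only.
for miles in (1e6, 10e6, 50e6):
    print(
        f"{miles:>12,.0f} test miles: "
        f"expected fatalities = {expected_fatalities(miles):.3f}, "
        f"P(at least one) = {prob_at_least_one(miles):.1%}"
    )
```

Under these assumptions, even tens of millions of test miles leave the expected number of pedestrian fatalities well below one, so any whole-number budget rounds to zero.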

The fatality somehow “proves” that self-driving car technology isn't viable - NOT THE POINT

Some have tried to draw conclusions about the viability of

ADS technology from the fact that there was a testing fatality. However, the

issues with ADS technical performance only prove what we already knew. The

technology is still maturing, and a human needs to intervene to keep things

safe. This crash wasn't about the maturity of the technology; it was about

whether the ADS public road testing itself was safe.

Concentrating on technology maturity (for example, via

disclosing disengagement rates) serves to focus attention on a long term future

of system performance without a safety driver. But the long term isn’t what’s

at issue.

The more pressing issue is ensuring that the road testing

going on right now is sufficiently safe. At worst, continued use of

disengagement rates as the primary metric of ADS performance could hurt safety rather

than help. This is because disengagement metrics, if gamed, could incentivize safety

drivers to take chances by avoiding disengagements in uncertain situations to

make the numbers look better. (Some companies no doubt have strategies to

mitigate this risk. But those are probably the companies with an SMS, which is

back to the point that matters.)

THE POINT: The safety culture was broken

Safety culture issues were the enabler for this particular crash. Given the limited number of miles that

can be accumulated by any current test fleet, we should see no fatalities occur

during ADS testing. (Perhaps a truly unavoidable fatality will occur. This is

possible, but given the numbers it is unlikely if ADS testing is reasonably

safe. So our goal should be set to zero.) Safety culture is critical to ensure

this.

The NTSB rightly pushes hard for a safety management system

(SMS). But be careful to note that they simply say that this is a part of

safety culture, not all of it. Safety culture means, among other things, a company taking responsibility for ensuring that its safety drivers are actually safe despite

the considerable difficulty of accomplishing that. Human safety drivers will

make mistakes, but a strong safety culture accounts for such mistakes in ensuring

overall safety.

It is important to note that the urgent point here is not

regulating self-driving car safety, but rather achieving safe ADS road testing.

They are (almost) two entirely different things. Testing safety is about

whether the company can consistently put an alert, able-to-react safety driver

on the road. On the other hand, ADS safety is about the technology. We need to

get to the technology safety part over time, but ADS road testing is the main

risk to manage right now.

Perhaps dealing with ADS safety would be easier if the

discussions of testing safety and deployment safety were more cleanly

separated.

THE TAKEAWAYS:

Chairman Sumwalt summed it up nicely in the intro. (You did watch that 5 and a half minute intro, right?)

But to make sure it hits home, this is my take:

One company's crash is every company's crash. You'll note I didn't name the company involved, because really that's irrelevant to preventing the next fatality and the damage it could do to the industry’s reputation.

The bigger point is every company can and should

institute good safety culture before further fatalities take place if they

have not done so already. The NTSB credited the company at issue with

significant change in the right direction. But it only takes one company that hasn’t gotten

the message to be a problem for everyone. We can reasonably expect fatalities involving

ADS technology in the future even if these systems are many times safer than

human drivers. But there simply aren’t enough vehicles on the road yet for a

truly unavoidable mishap to be likely to occur. It’s far too early.

If your company is testing (or plans to test) autonomous

vehicles, get a Safety Management System in place before you do public road testing.

At least conform to the details in the PennDOT testing guidelines, even if

you’re not testing in Pennsylvania. If you are already testing on public roads

without an SMS, you should stand down until you get one in place.

Once you have an SMS, consider it a down-payment on a

continuing safety culture journey.

Prof. Philip Koopman, Carnegie Mellon University

Author Note: The

author and his company work with a variety of customers helping to improve

safety. He has been involved with self-driving car safety since the late 1990s.

These opinions are his own, and this piece was not sponsored.