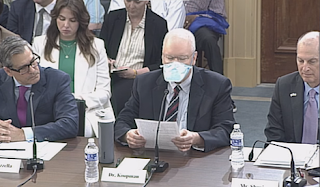

I recently had the privilege of testifying before the US House E&C committee on self-driving car safety. You can see the materials here:

A venue like this does not offer the best forum for nuance. In particular, one can make a precise statement for a reason and have that statement misunderstood (because there is limited time to explain), or misconstrued. The result can be talking past each other for reasons ranging from simple misunderstanding, to ideological differences, to the other side needing to show they are right regardless of the counter-arguments. I do not attempt to cover all topics here; just ones that feel like they could use some more discussion. (See my written testimony for the full list of topics.)

The venue also requires expressing opinions about the best path forward, a topic on which people can legitimately hold different views. I happen to believe that requiring companies to follow safety standards is our best bet for long-term competitiveness against international competitors (who end up having that same requirement). Others disagree.

In this blog I have the luxury of spending some time on areas that could not be covered with as much nuance and detail in the official proceedings.

US House E&C Hearing on July 26, 2023

Claims that AVs are already safe are premature

Ultimately AVs will win or lose based on public trust. I believe that making overly aggressive claims about safety degrades that trust.

The AV companies are busy messaging that they have already proven they are better than human drivers, framing it as a discussion of fatality rates. In other words, they are declaring victory on public road safety in terms of reducing road fatalities. But the data analysis does not support the claim that they are reducing fatalities, and it is still not really clear what the crash/injury rate outcomes are.

Their messaging amounts to: "40,000 Americans die on roads every year. We have proven we are safer. Delaying us will kill people." (Where "us" is the AV industry.) (Cruise: "Humans are terrible drivers" and computers "never drive distracted, drowsy, or drunk")

Cruise has also published some bar graphs of unclear meaning, because the baseline data and details are not public, and the bars selected tell only part of the story. For example, they report "collision with meaningful risk of injury" instead of actual injuries, even though we know they have already had a multi-injury crash, so that metric undercounts injuries. They also count only collisions with "primary contribution," even though we know they were partially at fault for that multi-injury crash, if not at "primary" fault. I have not seen Cruise explicitly say they have reduced fatalities (they say they "are on track to far exceed this projected safety benefit"), but the implied message of declaring victory on safety is quite clear ("our driverless AVs have outperformed the average human driver in San Francisco by a large margin.")

Other messaging might be based on reasonable data analysis that is extended to conclusions beyond what the available data supports. Waymo: "the Waymo Driver is already reducing traffic injuries and fatalities" -- where the fatality rate is an early estimate, and the serious injury counts are still small enough that they are in the data collection phase. Did I say their report is wrong? I did not. I said that the marketing claims being made are unsupported. If they claimed "our modeling projects we are reducing traffic injuries and shows us on track for reducing fatalities," then that might well be a reasonable claim. But it is not the claim they are making. I note their academic-style papers do a much more rigorous job of stating claims than their marketing material. So this is a matter of overly aggressive marketing.

It is premature to declare victory. (Did I say the claim of reduced fatalities is definitely false? No. I said it is premature to make that claim. In other words, nobody knows how this will turn out.)

Waymo and Cruise have 1 to 3 million miles each without a driver. The mean distance between fatal crashes for human drivers is ballpark 100 million miles (there are details and nuances, but we know human drivers -- including the drunks -- can achieve this on US public roads in a good year). So at a few million miles there is insufficient experience to know how fatalities will actually turn out.
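To make the sample-size point concrete, here is a minimal Python sketch. It assumes fatal crashes follow a Poisson process and uses the ballpark 100 million miles per fatality figure above as the human baseline; the "3x worse" and "10x worse" scenarios are hypothetical, chosen only to show how little a fatality-free record of a few million miles can distinguish.

```python
import math

# Ballpark human baseline from the text; a rough figure, not a precise statistic.
HUMAN_MILES_PER_FATALITY = 100e6

def prob_zero_fatalities(miles: float, miles_per_fatality: float) -> float:
    """Poisson probability of seeing zero fatal crashes in `miles` of driving."""
    expected_fatalities = miles / miles_per_fatality
    return math.exp(-expected_fatalities)

# Hypothetical scenarios: the AV is as safe as, 3x worse than, or 10x worse than the baseline.
for miles in (1e6, 3e6):
    for times_worse in (1, 3, 10):
        p = prob_zero_fatalities(miles, HUMAN_MILES_PER_FATALITY / times_worse)
        print(f"{miles / 1e6:.0f}M miles, {times_worse}x worse than humans: "
              f"P(zero fatalities) = {p:.2f}")
```

Under these assumptions, a fleet ten times more dangerous than human drivers would still have about a 74% chance of recording zero fatalities in 3 million miles, so "no fatalities yet" says very little either way.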

We are much further away still from having the data it will take to understand fatalities, which ranges from 300 million to 1 billion miles for high statistical confidence. A single fatality by any AV company in the next year or so would likely prove that AVs are not safe enough, but we don't know whether that will happen.
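One common way to see where mileage figures of this size come from is a zero-failure demonstration (at 95% confidence this is the familiar "rule of three"). The Python sketch below assumes a Poisson model, zero observed fatalities, and the ~100 million mile human baseline; it is an illustration under those assumptions, not necessarily how the 300 million to 1 billion mile range above was derived.

```python
import math

def zero_fatality_miles(miles_per_fatality: float, confidence: float) -> float:
    """Fatality-free miles needed to claim, at the given confidence, that the true
    rate is no worse than one fatality per `miles_per_fatality` (Poisson model)."""
    # P(0 fatalities in m miles | rate = 1 / miles_per_fatality) = exp(-m / miles_per_fatality).
    # Setting that probability equal to (1 - confidence) and solving for m gives:
    return -math.log(1.0 - confidence) * miles_per_fatality

for confidence in (0.95, 0.99, 0.999):
    miles = zero_fatality_miles(100e6, confidence)
    print(f"{confidence:.1%} confidence: about {miles / 1e6:,.0f} million fatality-free miles")
```

At 95% confidence this gives roughly 300 million miles. Demanding higher confidence, or allowing for the possibility that a fatality occurs along the way, pushes the requirement higher.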

Missy Cummings has recent results showing that Waymo has about 4x more non-fatal crashes on non-interstate roads than average human drivers (also on non-interstate roads) -- and Cruise has about 8x more. However, these crash rates are similar to those of Lyft and Uber in California. (There is actual research backing up that statement that will be published in due course.)

Also, even if one company shows it is safe enough, that does not automatically make other companies safe. We've already seen differences between Waymo (no injury crashes) and Cruise (a multi-injury crash). It will take more data to tell whether that is just bad luck or representative. Industry messaging that amounts to "Waymo is safe, therefore all AVs are safe" is also problematic, especially if it claims victory on fatality rates.

The reality is that both Waymo and Cruise are using statistical models of varying degrees of sophistication to predict their safety outcomes. Predictions can be wrong. In safety, predictions are often wrong for companies with poor safety cultures or that decide not to follow industry safety standards -- but we don't find out until the catastrophic failure makes the news. We can hope that won't happen here, but that is hope, and it is not time for a victory dance.

Summary: Companies are predicting they will reduce fatalities. That is not the same as actually proving it. There is a long way to run here, and the only thing I am sure of is that there will be surprises. Perhaps in a year we'll have enough data to get some more clarity about property damage and injury crashes, but only for companies that want to be transparent about their data. It will take even longer to show that the fatality rate is on a par with human drivers. If bad news arrives, it will come sooner. We should not make policy assuming they are safer.

Blame and AV safety

Blaming someone does not improve safety if it deflects the need to make a safety improvement. In particular, saying that a crash was not the fault of an AV company is irrelevant to measuring and improving safety. Much of road safety comes not from being blameless, but rather from compensating for mistakes, infrastructure faults, and other hazards that are not one's own fault.

Any metric that emphasizes "but it was not mostly our fault" is about public relations, not about safety. I guess PR is fine for investors, but baking that into a safety management system means lost opportunities to improve safety.

That is not appropriate behavior for any company that claims safety is its most important priority. If a company wants to publish both "crashes" and "at fault crashes," then I guess that is OK (although "at fault" should include partially at fault, rather than rounding 49% at fault down to 0% at fault). But publishing only "at fault" crashes is about publicity, not about safety transparency. (Even worse is lobbying for data collection to report only "at fault" crashes.)
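A small Python sketch shows how much the counting rule matters. The fault shares below are invented for illustration (they are not real crash data), and treating "primary" fault as a share above 50% is an assumption about how such a threshold might be applied.

```python
# Hypothetical crashes, each tagged with the AV's assumed share of fault (0.0 to 1.0).
# These values are invented for illustration only; they are not real crash data.
fault_shares = [0.0, 0.0, 0.1, 0.3, 0.49, 0.6, 1.0]

def count_crashes(shares: list[float]) -> int:
    """Count every crash, regardless of blame."""
    return len(shares)

def count_primary_fault(shares: list[float], threshold: float = 0.5) -> int:
    """'Primary contribution' style count (assumed rule): AV holds more than half the fault."""
    return sum(1 for s in shares if s > threshold)

def count_any_fault(shares: list[float]) -> int:
    """Count every crash where the AV contributed at all, including partial fault."""
    return sum(1 for s in shares if s > 0.0)

print("all crashes:         ", count_crashes(fault_shares))
print("primary fault only:  ", count_primary_fault(fault_shares))
print("any (partial) fault: ", count_any_fault(fault_shares))
```

With these made-up inputs, the "primary fault" rule reports 2 crashes, while counting any contribution reports 5 and counting all crashes reports 7. The 49% case is exactly the one that silently rounds down to zero.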

On the other hand, it is important to hold AV companies accountable for safety, just as we hold human drivers accountable.

A computer driver should have the same duty of care as a human driver on public roads. This is not formally the situation now, and this part of tort law will take a lot of cases to resolve, wasting a lot of time and resources. The manufacturer should be the responsible party for any negligent driving (i.e., driving behavior that would be negligent if a human driver were to do it) by their computer driver. Not the owner, and not the operator, because neither has the ability to design and validate the computer driver's behavior. This aspect of blame will use tort law in its primary role: to put pressure on the responsible party to avoid negligent driving behavior. The same rules should apply to human and computer drivers.

There is a nuanced issue regarding liability here. Companies seem to want to restrict their exposure to being only product liability, and evade tort law. However, if a computer driver runs a red light, that should be treated exactly as a human driver negligence situation. There should be no need to reverse engineer a huge neural network to prove a specific design defect (product liability) -- the fact of running a red light should be the basis for making a claim based on negligent behavior alone (tort law) without having the burden to prove a product defect. Product liability is more expensive and more difficult to pursue. The emphasis should be on using tort law when possible, and product liability only as a secondary path. That will keep costs down and make deserved compensation more accessible on the same basis it is for human driver negligence.

Also, aggressively blaming others rather than saying at the very least "we could have helped avoid this crash even if the other driver is assigned blame" degrades trust.

Summary: Statistics that incorporate blame impair transparency. However, it is helpful for tort law to hold manufacturers accountable for negligent behavior by computer drivers. And wouldn't you expect computer drivers to have near-zero negligent driving rates? Insisting on product liability rather than tort law is a way for manufacturers to decrease their accountability for computer driver problems, harming the ability of other road users to seek justified compensation if harmed.

Level 2/2+:

All this attention to AVs is distracting the discussion from a much bigger and more pressing economic and safety issue: autopilot systems and the like. The need to regulate those systems is much more urgent from a societal point of view. But it is not the focus of discussion because the auto industry has already gotten itself into a situation with no regulation other than a data reporting requirement and the occasional (perhaps after many years) recall.

Driver monitoring effectiveness, and designing a human/computer interaction approach that does not turn human drivers into moral crumple zones, need a lot more attention. It will take a long time for NHTSA to address this beyond doing recalls for the more egregious issues. Tort law (holding the computer driver accountable when it is steering) seems the only viable way to put some guard rails in place in the near to mid term.

Opinion: Level 2/2+ is what matters for the car industry now for both safety and economic benefits. AVs are still a longer term bet.

Don't sell on safety:

Companies should not sell their technology as the solution to the 40K/year fatality problem. There are many other technologies that can make a much quicker difference in that area, and social change, for that matter. If what we want is better road safety, investing tens of billions of dollars in robotaxi technology is one of the least economically efficient ways to do this. Instead we could improve active safety systems, encourage a switch to safer mass transit, press harder for social change on impaired/distracted driving, and so on. While one hopes this technology will help with fatalities in the long term, this is simply the wrong battle for the industry to fight with it for at least a decade. (Even if the perfect robotaxi were invented today -- which we are a long way from -- it would take many years to see a big drop in fatalities due to the time needed to turn over an automotive fleet that has an average age of about 12 years.)
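The fleet turnover point can be illustrated with a deliberately simplified Python model. It assumes a constant-size fleet with a constant retirement rate, so vehicle ages are exponentially distributed with the ~12 year average mentioned above, and it optimistically assumes every retired vehicle is replaced by a perfect computer-driven one. Real scrappage patterns are not this simple; this is only a sketch of the timescale.

```python
import math

AVERAGE_FLEET_AGE_YEARS = 12.0  # approximate figure from the text

def fraction_replaced(years: float, mean_age: float = AVERAGE_FLEET_AGE_YEARS) -> float:
    """Share of a constant-size fleet replaced after `years`, assuming a constant
    retirement rate (exponential age distribution with the given mean age)."""
    return 1.0 - math.exp(-years / mean_age)

for years in (1, 5, 10, 20):
    print(f"after {years:2d} years: {fraction_replaced(years):.0%} of the fleet replaced")
```

Under this simplification, roughly a third of the fleet turns over in five years and a little over half in ten, even with the unrealistic assumption that every replacement is a perfect robotaxi.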

Companies should sell on economic benefit, being better for cities, being better for consumers, transportation equity, and so on -- while not creating safety issues. Safety promises should simply indicate they are doing no harm. This is much easier to show, assuming it is true. And it does not set the industry up for collapse when the next (remember Uber ATG?) fatality eventually arrives.

The issue is that any statement about reducing fatalities is a prediction, not a conclusion. I would hope that car companies would not release a driverless car onto public roads unless they can predict it is safer than a human driver. They should disclose that argument in a transparent way. But it is a prediction, not a certainty. It will take years to prove. Why pick a fight that is so difficult when there is really no need to do so?

A smarter way to explain to the public how they are ensuring a safe and responsible release is to use an approach such as:

- Follow industry safety standards to set a reasonable expectation of safe deployment and publicly disclose independent conformance checks.

- Establish in advance the metrics that will be used to demonstrate safety (not cherry-picked after the fact).

- Publish transparent monthly reports of those metric outcomes vs. goals.

- Show that identified issues are resolved, rather than continuing to scale up despite problems. Problems include not only crashes, but also negative externalities on other road users.

- Publish lessons learned in a generic way

- Show public benefit is being delivered beyond safety, again with periodic metric publications.
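As a sketch of what "metrics established in advance" and "monthly reports of outcomes vs. goals" could look like in practice, the Python below fixes a list of metrics and goals before deployment and produces a monthly comparison. The metric names, goal values, and the convention that lower numbers are better are hypothetical assumptions for illustration, not any company's actual reporting scheme.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PreRegisteredMetric:
    name: str    # hypothetical metric name, fixed before deployment starts
    goal: float  # hypothetical upper limit committed to in advance (lower is better)

def monthly_report(metrics: list[PreRegisteredMetric], outcomes: dict[str, float]) -> list[str]:
    """Compare this month's outcomes against metrics declared in advance.

    Every pre-registered metric appears in the report, so an unfavorable
    metric cannot quietly disappear; a missing outcome is flagged instead.
    """
    report = []
    for metric in metrics:
        if metric.name not in outcomes:
            report.append(f"{metric.name}: NOT REPORTED")
            continue
        actual = outcomes[metric.name]
        status = "meets goal" if actual <= metric.goal else "MISSES goal"
        report.append(f"{metric.name}: {actual} vs. goal {metric.goal} ({status})")
    return report

# Illustrative usage with invented metric names and numbers (not real data).
metrics = [
    PreRegisteredMetric("injury_crashes_per_million_miles", 0.5),
    PreRegisteredMetric("emergency_vehicle_blockages", 0.0),
]
for line in monthly_report(metrics, {"injury_crashes_per_million_miles": 0.7}):
    print(line)
```

The design choice worth noting is that the report is driven by the pre-registered list, not by whatever data happens to be convenient that month.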

Three principles for safety, all of which are at odds with the industry's current adversarial approach to regulatory oversight, are:

- Transparency

- Accountability

- Independent oversight

It is not only that you need to do those things to actually get safety. It is also that these things build trust.

Other key points:

- Any person or organization who promotes the "human drivers are bad, so computers will be safe" and/or the "94% of crashes are caused by human error" talking points should be presumptively considered an unreliable source of information. At this point I feel those are propaganda points. Any organization saying that safety is its #1 priority should know better.

- The main challenge to the industry is not regulations -- it is the ability to build reliable, safe vehicles that scale up in the face of the complexity of the real world. Expectations of exponential growth in the number of deployed vehicles any time soon seem unrealistic. The current industry city-by-city approach is likely to continue to grind away for years to come. Being realistic about this will avoid pressure to make overly aggressive deployments that compromise safety.

- In other industries (e.g., aviation, rail) following their own industry standards is an essential part of assuring safety. The car companies should be required to follow their standards too (e.g., ISO 26262, ISO 21448, UL 4600, ISO/SAE 21434, perhaps ISO TS 5083 when we find out what is in it). This varies across companies, with some companies being very clearly against following those standards.

- There is already a regulatory framework, written by the previous administration. This gives us an existing process with a potential bipartisan starting point to move the discussion forward instead of starting rule making from scratch. That framework includes a significant shift in government policy to require the industry to follow its own consensus safety standards. My understanding is that US Government policy is to use such standards whenever feasible. It is time for US DOT to get with the program here (as they proposed to do several years ago -- but that effort has stalled ever since).

- Absolute preemption of municipal and state authority is a problem, especially for "performance" aspects of a computer driver:

  - This prevents states and localities from protecting their constituents (if they choose to do so) while the Federal Government is still working on AV regulations.

  - Even after there are federal regulations, state and local governments need to be able to create and enforce traffic laws and rules of the road, and to hold computer drivers accountable (e.g., issue and revoke licenses based on factors such as computer driver negligence).

  - In the end, the Federal Government should regulate the ability of equipment to follow whatever road rules are in place. States and localities should be able to set behavioral rules for road use and enforce compliance for computer drivers without the Federal Government subsuming that traditional State/Local ability to adapt traffic rules to local conditions.

- Do you remember how ride hail networks were supposed to solve the transportation equity problem? Didn't really happen, did it? Forced arbitration was a part of that outcome, especially for the disabled. We need to make sure that the AV story has a better ending by avoiding forced arbitration being imposed on road users. It is even possible that taking one ride hail ride might force you into arbitration if you are later hurt as a pedestrian by a car from that company (depending on the contract language -- the one you clicked through without reading, or without really understanding even if you did read it). Other aspects of equity matter too, such as equity in exposing vulnerable populations to the risks of public road testing.

- There are numerous other points summarized after the end of my written narrative that also matter, covering safety technology, jobs/economic impact, liability, data reporting, regulating safety, avoiding complete preemption, and debunking industry-promoted myths.

- There is a Q&A at the end of my testimony where I had the time to give more robust answers to some of the questions I was asked, and more.

Last update 7/27/2023