Don’t forget that there will always be something in the world you’ve never seen before and have never trained on, but your self-driving car is going to have to deal with it. A particular area of concern is correlated failures across sensing modes.

Perception safety metrics deal with how a self-driving car takes sensor inputs and maps them into a real-time model of the world around it.

Perception metrics should cover a number of areas. One area is sensor performance, measured not in absolute terms but against safety requirements. Can a sensor see far enough ahead to give accurate perception in time for the planner to react? Does the accuracy remain sufficient as environmental and operational conditions change? Note that, for the needs of the planner, farther isn’t better without limit. At some point you can see far enough ahead that you’ve reached the planning horizon, and sensor performance beyond that might help with ride comfort or efficiency but is not necessarily directly related to safety.
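As a rough illustration of “far enough ahead,” here is a minimal sketch that checks a sensor’s usable range against a simple stopping-distance model. It assumes straight-line, constant-deceleration braking and a fixed perception-plus-planning latency; the function names, parameters, and numbers are hypothetical, and a real analysis would need much more (object motion, road curvature, occlusion, how detection accuracy falls off with range).

```python
# Minimal sketch: does sensor range cover the distance needed to stop?
# Hypothetical model: constant deceleration and a fixed end-to-end latency.


def stopping_distance_m(speed_mps: float, latency_s: float, decel_mps2: float) -> float:
    """Distance covered during perception/planning latency plus braking distance."""
    reaction_distance = speed_mps * latency_s
    braking_distance = speed_mps ** 2 / (2.0 * decel_mps2)
    return reaction_distance + braking_distance


def range_margin_m(sensor_range_m: float, speed_mps: float,
                   latency_s: float, decel_mps2: float) -> float:
    """Positive: range exceeds what is needed to stop. Negative: a safety shortfall."""
    return sensor_range_m - stopping_distance_m(speed_mps, latency_s, decel_mps2)


# Hypothetical example: 20 m/s, 0.5 s latency, 60 m of usable sensor range.
dry = range_margin_m(60.0, 20.0, 0.5, decel_mps2=5.0)  # needs 10 + 40 = 50 m
wet = range_margin_m(60.0, 20.0, 0.5, decel_mps2=3.0)  # needs about 76.7 m
print(f"dry margin: {dry:.1f} m, wet margin: {wet:.1f} m")
```

The wet-road case shows why sufficiency has to hold across operating conditions: the same sensor goes from adequate to inadequate as braking capability degrades. Range well beyond what this check requires may help comfort or efficiency, but it does not improve this particular safety margin.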

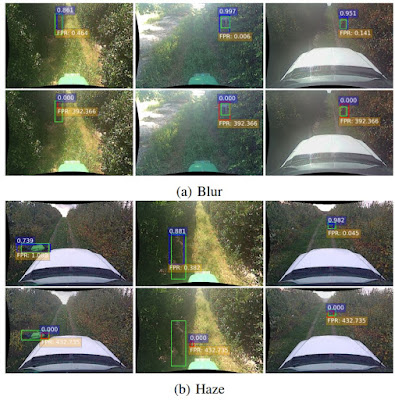

Another type of metric deals with sensor fusion. At a high level, sensor fusion succeeds if the fusion strategy can actually detect the types of things you need to see in the environment. But even if sensor fusion seems to be seeing everything it needs to, there are some underlying safety issues to consider.

One is measuring correlated failures. Suppose your sensor fusion algorithm assumes that multiple sensors fail independently. You’ve done some math and concluded that the chance of all the sensors failing at the same time is low enough to tolerate. That analysis only holds if the sensor failures really are independent.
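To make that concrete with purely hypothetical numbers: if each of three sensors fails to see a given object with probability $10^{-3}$, the independence assumption simply multiplies those probabilities. The general chain rule shows what changes when that assumption is wrong.

$$
P(F_1 \cap F_2 \cap F_3) = P(F_1)\,P(F_2)\,P(F_3) = (10^{-3})^3 = 10^{-9} \quad \text{(independent failures)}
$$

$$
P(F_1 \cap F_2 \cap F_3) = P(F_1)\,P(F_2 \mid F_1)\,P(F_3 \mid F_1 \cap F_2) \quad \text{(in general)}
$$

If a common cause (say, road spray that degrades two sensors at once) made $P(F_2 \mid F_1) = 0.5$ while the third sensor stayed independent, the joint failure probability would be $10^{-3} \times 0.5 \times 10^{-3} = 5 \times 10^{-7}$, which is 500 times worse than the independence calculation claims.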

For example, if you have three sensors and you’re assuming that they fail independently, knowing that two of those sensors failed at the same time on the same thing is really important, because it provides counter-evidence to your independence assumption. But you need to look for this specifically, because your vehicle may have performed just fine thanks to the third sensor, which failed independently and still saw the object. So the important thing here is that the metric is not about whether your sensor fusion works, but rather whether the independence assumption behind your analysis was valid.
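One way to look for that counter-evidence is to compare, for each pair of sensors, how often they miss the same object against how often they would both miss it if they failed independently. The sketch below assumes a hypothetical log format in which each sensor has a list of booleans, one per ground-truth object, marking whether that sensor missed it. A lift near 1 is consistent with independence; a lift well above 1 suggests correlated failures.

```python
from itertools import combinations


def co_failure_lift(miss_log: dict[str, list[bool]]) -> dict[tuple[str, str], float]:
    """Observed joint-miss rate divided by the rate expected under independence.

    miss_log[sensor][i] is True if `sensor` missed ground-truth object i
    (hypothetical log format; all lists must have the same length).
    """
    n = len(next(iter(miss_log.values())))
    lifts = {}
    for a, b in combinations(sorted(miss_log), 2):
        p_a = sum(miss_log[a]) / n
        p_b = sum(miss_log[b]) / n
        p_joint = sum(x and y for x, y in zip(miss_log[a], miss_log[b])) / n
        expected = p_a * p_b
        # Undefined if either sensor never missed anything in this log.
        lifts[(a, b)] = p_joint / expected if expected > 0 else float("nan")
    return lifts


# Hypothetical log over 10 ground-truth objects.
log = {
    "camera": [True, True] + [False] * 8,
    "lidar": [True, True] + [False] * 8,
    "radar": [False] * 5 + [True] + [False] * 4,
}
for pair, lift in co_failure_lift(log).items():
    print(pair, round(lift, 2))
```

With small counts this ratio is noisy, so in practice you would want a statistical test (Fisher’s exact test is one option) and enough exposure to observe rare joint failures at all before drawing conclusions.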

Another sensor fusion metric to consider is whether detection ride-through based on tracking is covering up problems. It’s easy to rationalize that if you see something nine frames out of 10, then missing one frame isn’t a big deal because you can track through the dropout. If missed detections are infrequent and random, that might be a valid assumption. But you might also have clusters of missed detections tied to certain types of environments, certain types of objects, or certain types of sensors, even if overall those misses are a small fraction. Keeping track of how often ride-through is actually needed, and how long it has to last, is important to validate the underlying assumption of random dropouts rather than clustered or correlated dropouts.
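Here is a minimal sketch of measuring how often and how long ride-through is needed, assuming a hypothetical per-frame record of whether a tracked object was detected. It compares the observed share of dropouts longer than a tracker’s ride-through budget with what you would expect if each frame were missed independently with the same overall probability p, in which case a dropout lasts more than m frames with probability p^m.

```python
def dropout_runs(detected: list[bool]) -> list[int]:
    """Lengths of consecutive runs of missed detections in a per-frame history."""
    runs, current = [], 0
    for hit in detected:
        if hit:
            if current:
                runs.append(current)
            current = 0
        else:
            current += 1
    if current:  # history ended during a dropout
        runs.append(current)
    return runs


def ride_through_summary(detected: list[bool], max_ride_through: int) -> dict:
    """Compare observed long dropouts with the rate expected for random misses.

    `max_ride_through` is a hypothetical tracker budget in frames.
    Assumes a non-empty history.
    """
    runs = dropout_runs(detected)
    p_miss = detected.count(False) / len(detected)
    too_long = sum(1 for r in runs if r > max_ride_through)
    return {
        "miss_rate": p_miss,
        "num_dropouts": len(runs),
        "longest_dropout": max(runs, default=0),
        "observed_long_fraction": too_long / len(runs) if runs else 0.0,
        # If each frame were missed independently with probability p_miss,
        # P(dropout length > m) = p_miss ** m.
        "expected_long_fraction_if_random": p_miss ** max_ride_through,
    }


# Hypothetical track: 90% detection overall, but all misses in one burst.
track = [True] * 45 + [False] * 10 + [True] * 45
print(ride_through_summary(track, max_ride_through=2))
```

This track has the nine-in-ten detection rate from the example above, yet its single 10-frame burst is far outside what random dropouts would produce. Aggregating such summaries by environment, object type, and sensor is what would show where the clusters are.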

A third type of metric is classification accuracy. It’s common to track false negatives, which are how often you miss something that matters. For example, if you miss a pedestrian, it’s hard to avoid hitting something you don’t see. But you should track false negatives not just for the sensor fusion output, but also per sensor and per combination of sensors. This goes back to making sure there aren’t systematic faults that undermine the assumption of independent failures.
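A minimal sketch of that bookkeeping, assuming a hypothetical record for each ground-truth object listing which sensors failed to detect it. Counting exact combinations, not just individual sensors, is what exposes sensors that tend to miss the same things.

```python
from collections import Counter


def false_negative_breakdown(missed_by: list[set[str]]) -> tuple[Counter, Counter]:
    """missed_by[i] is the set of sensors that missed ground-truth object i.

    Returns per-sensor miss counts and counts of each exact multi-sensor
    combination that missed the same object.
    """
    per_sensor: Counter = Counter()
    per_combination: Counter = Counter()
    for sensors in missed_by:
        per_sensor.update(sensors)
        if len(sensors) >= 2:
            per_combination[frozenset(sensors)] += 1
    return per_sensor, per_combination


# Hypothetical ground-truth pedestrians and which sensors missed each one.
records = [{"camera"}, {"camera", "lidar"}, set(), {"camera", "lidar"}, {"radar"}]
per_sensor, per_combination = false_negative_breakdown(records)
print(per_sensor)
print(per_combination)
```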

There are also false positives, which are how often you see something that isn’t really there. For example, a pattern of cracks in the pavement might look like an obstacle and cause a panic stop. Again, sensor fusion might be masking a lot of false positives, but you still need to know whether the independence assumption you used to reason about how the sensors fail as a system is valid.

Somewhere in between are misclassifications. For example, whether something is a bicycle, a wheelchair, or a pedestrian is likely to matter for prediction, even though all three are objects that shouldn’t be hit.

Just touching on the independence point one more time: all these metrics (false negatives, false positives, and misclassifications) should be tracked per sensor modality. Suppose vision misclassifies something, but sensor fusion saves you and the system still gets it right. You can’t count on that always working. You want each of your sensor modalities to work as well as it can without systematic defects, because next time you might not get lucky, and the sensor fusion algorithm might suffer a correlated fault that leads to a problem.
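The sketch below tallies false negatives, false positives, and misclassifications per modality, and separately counts the “lucky saves” where a modality got it wrong but the fused output was still correct. The record layout and class labels are hypothetical; a label of None means no detection, and a ground truth of None means nothing was actually there.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Observation:
    truth: Optional[str]                    # None: nothing is actually there
    per_modality: dict[str, Optional[str]]  # None: that modality detected nothing
    fused: Optional[str]                    # fused perception output


def outcome(truth: Optional[str], predicted: Optional[str]) -> str:
    if truth is None:
        return "correct" if predicted is None else "false_positive"
    if predicted is None:
        return "false_negative"
    return "correct" if predicted == truth else "misclassification"


def tally(observations: list[Observation]) -> tuple[Counter, Counter]:
    """Per-modality error counts, plus the subset the fused output corrected."""
    errors: Counter = Counter()
    saves: Counter = Counter()
    for obs in observations:
        fused_ok = outcome(obs.truth, obs.fused) == "correct"
        for modality, label in obs.per_modality.items():
            result = outcome(obs.truth, label)
            if result != "correct":
                errors[(modality, result)] += 1
                if fused_ok:
                    saves[(modality, result)] += 1
    return errors, saves


observations = [
    Observation("pedestrian", {"camera": "bicycle", "lidar": "pedestrian"}, "pedestrian"),
    Observation(None, {"camera": "obstacle", "lidar": None}, None),  # pavement cracks
    Observation("pedestrian", {"camera": None, "lidar": None}, None),
]
errors, saves = tally(observations)
print(errors)
print(saves)
```

A saves count that keeps growing for one modality is the warning sign described above: fusion is covering for a systematic defect that could line up with another modality’s failure next time.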

In all the different aspects of perception, edge cases matter. There are going to be things you haven’t seen before and you can’t train on something you’ve never seen.

So how well does your sensing system generalize? There are very likely to be systematic biases in training and validation data that never occurred to anyone to notice. An example we’ve seen: if you collect data in cool weather in the Northeast US, nobody outdoors is wearing shorts. The system therefore learns implicitly that tan or brown things sticking out of the ground with green blobs on top are bushes or trees. But in the summer, that might be someone in shorts wearing a green shirt.
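Biases that nobody thought to notice are, by definition, hard to measure. For attributes that someone does tag (season, weather, time of day, clothing), a simple check is to compare how often each condition appears in deployment data versus training data. This minimal sketch assumes hypothetical condition tags on both data sets; it cannot catch a bias along an attribute that was never tagged, which is exactly the trap in the shorts example.

```python
from collections import Counter


def underrepresented_conditions(train_tags: list[str], field_tags: list[str],
                                min_ratio: float = 0.5) -> dict[str, float]:
    """Return tags whose share of training data is below `min_ratio` times
    their share of field data (a ratio of 0.0 means never seen in training)."""
    train, field = Counter(train_tags), Counter(field_tags)
    n_train, n_field = len(train_tags), len(field_tags)
    flagged = {}
    for tag, count in field.items():
        field_share = count / n_field
        train_share = train[tag] / n_train  # Counter returns 0 for missing tags
        ratio = train_share / field_share
        if ratio < min_ratio:
            flagged[tag] = ratio
    return flagged


# Hypothetical: data collected mostly in cool weather, deployed year-round.
training_data_tags = ["cool"] * 95 + ["warm"] * 5
deployment_tags = ["cool"] * 50 + ["warm"] * 50
print(underrepresented_conditions(training_data_tags, deployment_tags))
```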

You also have to think about unusual presentations of known objects. For example, a person carrying a bicycle is different than a bicycle carrying a person. Or maybe someone’s fallen down into the roadway. Or maybe you see very strange vehicle configurations or weird paint jobs on vehicles.

The thing to look for in all of these is clusters or correlations in perception failures: things that don’t support an assumption of random, independent failures across modes. Those are the places where sensor fusion is going to have trouble sorting out the mess and compensating for failures.
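One hedged way to look for such clusters is to slice failure records by condition, object type, or sensor and flag slices whose failure rate is far above the overall rate. The slice keys, the factor of 3, and the minimum sample size below are hypothetical choices, not recommended values.

```python
from collections import defaultdict


def failure_hotspots(records, factor: float = 3.0, min_count: int = 20) -> dict:
    """records: iterable of (slice_key, failed) pairs.

    Returns {slice_key: failure_rate} for slices with at least `min_count`
    samples whose failure rate is at least `factor` times the overall rate.
    """
    totals: dict = defaultdict(int)
    failures: dict = defaultdict(int)
    for key, failed in records:
        totals[key] += 1
        failures[key] += int(failed)
    overall = sum(failures.values()) / sum(totals.values())
    if overall == 0:
        return {}
    hotspots = {}
    for key, n in totals.items():
        rate = failures[key] / n
        if n >= min_count and rate >= factor * overall:
            hotspots[key] = rate
    return hotspots


# Hypothetical records: (condition, sensor) slices with per-detection outcomes.
records = (
    [(("day", "camera"), False)] * 198 + [(("day", "camera"), True)] * 2
    + [(("night_rain", "camera"), False)] * 14 + [(("night_rain", "camera"), True)] * 6
)
print(failure_hotspots(records))
```

The night-and-rain slice is a small part of the data, so it barely moves the aggregate failure rate, which is exactly how aggregate accuracy can hide a correlated failure.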

A big challenge in perception is that the world is an essentially infinite supply of edge cases. It’s advisable to have a robust taxonomy of objects you expect to see in your operational design domain, especially to the degree that prediction, which we’ll discuss later on, requires accurate classification of objects or maybe even object subtypes.

While it’s useful to have a metric that deals with coverage of the taxonomy in training and testing, it’s just as important to have a metric for how well the taxonomy actually represents the operational design domain. Along those lines, a metric that might be interesting is how often you encounter something that’s not in the taxonomy, because if that’s happening every minute or every hour, that tells you your taxonomy probably needs more maturity before you deploy.
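Both metrics are straightforward once the data are labeled. This sketch assumes a hypothetical taxonomy given as a set of class names, training labels drawn from it, and field encounters that a human reviewer has labeled, including things outside the taxonomy.

```python
from collections import Counter


def taxonomy_coverage(taxonomy: set[str], training_labels: list[str],
                      min_examples: int = 1) -> tuple[float, list[str]]:
    """Fraction of taxonomy classes with at least `min_examples` training
    examples, plus the sorted list of classes that fall short."""
    counts = Counter(training_labels)
    uncovered = sorted(c for c in taxonomy if counts[c] < min_examples)
    return 1 - len(uncovered) / len(taxonomy), uncovered


def out_of_taxonomy_per_hour(reviewed_labels: list[str], taxonomy: set[str],
                             hours: float) -> float:
    """Encounters whose reviewed label is not in the taxonomy, per operating hour."""
    return sum(1 for label in reviewed_labels if label not in taxonomy) / hours


taxonomy = {"car", "pedestrian", "bicycle", "wheelchair"}
training = ["car"] * 50 + ["pedestrian"] * 30 + ["bicycle"] * 5
field = ["car", "pedestrian", "horse_and_buggy", "car", "mattress"]  # reviewed over 2 h
print(taxonomy_coverage(taxonomy, training))
print(out_of_taxonomy_per_hour(field, taxonomy, hours=2.0))
```

In this made-up example, one novel object type per operating hour is the kind of rate that, per the reasoning above, suggests the taxonomy needs more maturity before deployment.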

Because the world is open-ended, it is also useful to measure how often your perception says, "I’m not sure what that is." It’s okay to handle "I’m not sure" with a safety shutdown or some other safe action. But knowing how often your perception is confused or has a hole is an important way to measure perception maturity.
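One simple, commonly used way to get an explicit "I’m not sure" is to abstain whenever the classifier’s top class probability falls below a threshold, then count abstentions per operating hour. This is a swapped-in illustration, not a description of any particular system; the 0.8 threshold and the probability format are hypothetical. Neural network confidence scores are often overconfident on unfamiliar inputs, so this count can understate how often perception is actually confused.

```python
UNKNOWN = "unknown"


def classify_or_abstain(class_probs: dict[str, float], threshold: float = 0.8) -> str:
    """Return the most probable class, or UNKNOWN if its probability is below threshold."""
    label, prob = max(class_probs.items(), key=lambda item: item[1])
    return label if prob >= threshold else UNKNOWN


def unsure_per_hour(outputs: list[dict[str, float]], hours: float,
                    threshold: float = 0.8) -> float:
    """Abstentions per operating hour, a rough measure of perception uncertainty."""
    unsure = sum(1 for probs in outputs if classify_or_abstain(probs, threshold) == UNKNOWN)
    return unsure / hours


outputs = [
    {"pedestrian": 0.95, "bicycle": 0.05},
    {"pedestrian": 0.50, "bicycle": 0.50},     # ambiguous: abstain
    {"pedestrian": 0.60, "wheelchair": 0.40},  # below threshold: abstain
]
print(unsure_per_hour(outputs, hours=2.0))
```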

Summing up, perception metrics as we’ve discussed them cover a broad swath, from sensors through sensor fusion to object classification. In practice, these might be split into different types of metrics, but they have to be covered somewhere. And as this discussion has shown, they do interact a bit.

The most important outcome of these metrics is a feel for how well the system can build a model of the outside world, given that sensors are imperfect, operational conditions can compromise sensor capabilities, and the real world can present objects and environmental conditions that have never been seen before. Worse, those novel objects and conditions might cause correlated sensor failures that undermine the ability of sensor fusion to accurately classify specific types of objects. Don’t forget, there will always be something in the world you’ve never seen before and have never trained on, but your self-driving car is going to have to deal with it.