A Cruise robotaxi failed to yield effectively to a fire truck, delaying it.

Sub-headline: Garbage truck driver saves the day when Cruise autonomous vehicle proves itself to not be autonomous.

The referenced article explains the incident in detail, which involves a garbage truck blocking one lane and the Cruise vehicle pulling over into a position that did not leave enough room for the fire truck to pass. But it also argues that things like this should be excused because they happen in the cause of developing life-saving technology. I have to disagree. Real harm done now to real people should not be balanced against theoretical harm potentially saved in the future. Especially when there is no reason (other than business incentives) to be doing the harm today, and the deployment continues once it is obvious that near-term harm is likely.

I would say that if the car can't drive in the city like a human driver, it should have a human driver to take over when the car can't. Whatever remote system Cruise has is clearly inadequate, because we've seen three problems recently (the article mentions them; an important one is driving with headlights off at night and Cruise's reaction to that incident). The article attributes the root cause of this incident to Cruise not having worked through all interactions with emergency vehicles, which is a reasonable analysis as far as it goes. But why are they operating in a major city with half-baked emergency vehicle interaction?

This time no major harm was done because the garbage truck driver was able to move that vehicle instead. (Realize that a garbage truck stopped in-lane with no driver in the vehicle is business as usual, as a human driver would know.) The fire did in fact cause property damage and injuries, so things could have been a lot worse due to a longer delay if not for a quick-acting truck driver. (Who by the way has my admiration for acting quickly and decisively.) What if the garbage truck had been a disabled vehicle, or the truck had been in the middle of an operation during which it could not be moved? Then the fire truck would have been stuck. The article says the situation was complex, but driving in the real world is complex. I've personally been in situations where I needed to do something unconventional to let an emergency vehicle pass. A competent human driver understands the situation and acts. Yep, it's complex. If you can't handle complex, don't get on the road without a human backup driver.

The safety driver should not be removed until the vehicle can conform 100% to safety-relevant traffic laws and handle the practical resolution of related situations such as this. "Testing" without a human safety driver when the vehicle isn't safe is not testing -- it's just plain irresponsible. There is no technical reason preventing Cruise from keeping a safety driver in their vehicles while they continue testing. Doing so wouldn't delay the technology development in the slightest -- if what they care about is safety. If the safety driver literally has to do nothing, you've still done your testing and your multi-billion-dollar company is out a few bucks for safety driver wages. If safety is truly #1, why would you choose to cut costs and remove that safety driver if you know your system isn't 100% safe yet? Removing the safety driver is pure theater playing to public opinion and, one assumes, investors.

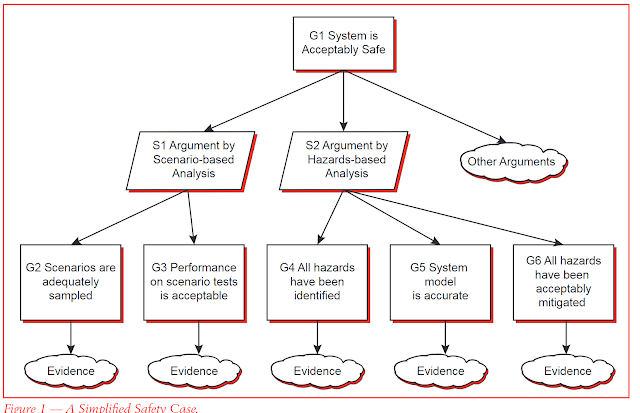

Cruise says that they apply a Safety Management System (SMS) "rigorously across the company." A main point of an SMS is to recognize operational hazards and alter operations in response to discovered hazards. In this case, it is clear that interaction with emergency vehicles requires more sophistication and presents a public safety hazard as currently implemented. Safety drivers should go back into the vehicles until they fix all such issues (not just this one particular interaction scenario) and their vehicle can really drive safely. Unless they simply decide that letting fire trucks on the way to a burning building pass is low priority for them.

Cruise is lucky the delayed fire truck arrival did not contribute to a death -- this time. This incident happened at 4 AM, which shows even in a nearly empty city you need to have a very sophisticated driver to avoid safety issues. At the very least they should halt no-human-driver operations until they can attest that they can handle every possible emergency vehicle interaction without causing more delay to the emergency vehicle than a proficient human driver, including situations in which a human driver would normally get creative to allow emergency vehicle progress. City officials wrote in a filing to the California Public Utilities Commission: "This incident slowed SFFD response to a fire that resulted in property damage and personal injuries," and were concerned that frequent in-lane stops by Cruise vehicles could have a "negative impact" on fire department response times. Every safety-related incident needs to be addressed. It is a golden opportunity to improve before you get unlucky. Cruise says they have a "rigorous" SMS, but I'm not seeing it. Will Cruise learn? Or will they keep rolling dice without safety drivers? Cruise shouldn't wait for something worse to happen before getting the message that they need to do better if they want to operate without a safety driver.