As the self-driving car industry works to create safer vehicles, it is facing a significant regulatory challenge. Complying with existing Federal Motor Vehicle Safety Standards (FMVSS) can be difficult or impossible for advanced designs. For conventional vehicles, the FMVSS structure helps ensure a basic level of safety by testing some key safety capabilities. However, it might be impossible to run these tests on advanced self-driving cars that lack a brake pedal, steering wheel, or other components required by the test procedures.

While there is industry pressure to waive some FMVSS requirements in the name of hastening progress, doing so is likely to result in safety problems. I’ll explain a way out of this dilemma based on the established technique of using safety cases. In brief, automakers should create an evidence-based explanation of why they achieve the intended safety goals of current FMVSS regulations even if they can’t perform the tests as written. This does not require disclosure of proprietary autonomous vehicle technology, and does not require waiting for the government to design new safety test procedures.

Why the Current FMVSS Structure Must Change

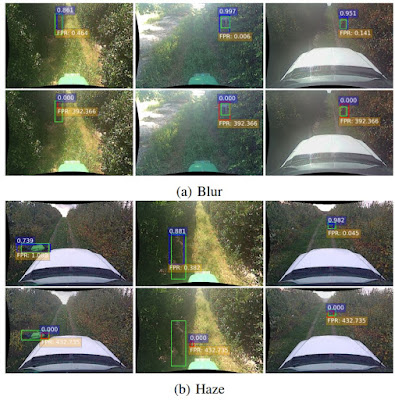

Consider an example of FMVSS 138, which relates to tire pressure monitoring. At some point many readers have seen a tire pressure telltale light, warning of low tire pressure:

FMVSS 138 Low Tire Pressure Telltale

This light exists because of FMVSS 138, which specifies tests to make sure that a driver-visible telltale light turns on for under-inflation and blow-out conditions under specified road surface conditions, vehicle speeds, and so on.

But what if an unmanned vehicle doesn’t have a driver seat? Or even a dashboard for mounting the telltale? Should we wait years for the government to develop an alternate self-driving car FMVSS series? Or should we simply waive FMVSS compliance when the tests don’t make sense as written?

Simplistic, blanket waivers are a bad idea. It is said that safety standards such as FMVSS are written in the blood of past victims. Self-driving cars are supposed to improve safety. We shouldn’t grant FMVSS waivers that will result in more blood being spilled to re-learn well-understood lessons for self-driving cars.

The weakness of the FMVSS approach is that the tests don’t explicitly capture the “why” of the safety standard. Rather, there is a very prescriptive set of rules, operating in a manner similar to building codes for houses. Like building codes, they can take time to update when new technology appears. But just as it is a bad idea to skip a building inspection on your new house, you shouldn’t let vehicle makers skip FMVSS tests for your new car – self-driving or otherwise. Despite the fear of hindering progress, something must be done to adapt the FMVSS framework to self-driving cars.

A Safety Case Approach to FMVSS

One way to permit rapid progress while still ensuring that we don’t lose ground on basic vehicle safety is to adopt a safety case approach. A safety case is a written explanation of why a system is appropriately safe. It has three parts: a safety goal, a strategy for meeting the goal, and evidence that the strategy actually works.
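For readers who think in data structures, the three parts of a safety case can be sketched as a small record. This is only an illustration: the class names, fields, and the `is_supported` check are hypothetical, and the sample evidence entry is a placeholder rather than a real test result.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Evidence:
    description: str  # what was tested or analyzed
    passed: bool      # whether the result supports the strategy


@dataclass
class SafetyCase:
    goal: str       # the "why" behind the standard
    strategy: str   # how this particular vehicle design meets the goal
    evidence: List[Evidence] = field(default_factory=list)

    def is_supported(self) -> bool:
        # A claim with no evidence is an assertion, not a safety case.
        return bool(self.evidence) and all(e.passed for e in self.evidence)


case = SafetyCase(
    goal="Detect low tire pressure and avoid blowouts",
    strategy="Pull off the road and await service when pressure is low",
)
supported_before = case.is_supported()

# Placeholder entry for illustration only; not an actual test record.
case.evidence.append(
    Evidence("Track test: vehicle pulls over after pressure loss", passed=True)
)
supported_after = case.is_supported()

print(supported_before, supported_after)
```

The point of the sketch is that the goal stays fixed while the strategy and evidence are free to vary by vehicle design.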

To create an FMVSS 138 safety case, a self-driving car maker would first need to identify the safety goals behind that standard. A number of public documents that precede FMVSS 138 state safety goals of detecting low tire pressure and avoiding blowouts. Those goals were, in turn, motivated by dozens of deaths resulting from tire blowouts that provoked the TREAD Act of 2000.

The next step is for the vehicle maker to propose a safety strategy compatible with its product. For example, vehicle software might set internal speed and distance limits in response to a tire failure, or simply pull off the road to await service. The safety case would also propose tests to provide concrete evidence that the safety strategy is effective. For example, instead of demonstrating that a telltale light illuminates, the test might instead show that the vehicle pulls to the side of the road within a certain timeframe when low tire pressure is detected. There is considerable flexibility in safety strategy and evidence so long as the safety goal is adequately met.
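As a rough sketch of what such a strategy and its evidence check might look like in software, consider the following. Everything here is an assumption made for illustration: the function names, the pressure thresholds, and the 60-second deadline are hypothetical and are not taken from FMVSS 138 or from any vehicle maker's design.

```python
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    LIMIT_SPEED = "limit_speed"  # keep driving under speed and distance limits
    PULL_OVER = "pull_over"      # leave the roadway and await service


# Hypothetical thresholds, expressed as a fraction of recommended pressure.
LOW_PRESSURE_FRACTION = 0.75
CRITICAL_PRESSURE_FRACTION = 0.50
# Hypothetical deadline for reaching a stop once pulling over is required.
PULL_OVER_DEADLINE_S = 60.0


def respond_to_tire_pressure(measured_kpa: float, recommended_kpa: float) -> Action:
    """Choose a vehicle response from a single tire pressure reading."""
    if recommended_kpa <= 0:
        raise ValueError("recommended pressure must be positive")
    fraction = measured_kpa / recommended_kpa
    if fraction <= CRITICAL_PRESSURE_FRACTION:
        return Action.PULL_OVER
    if fraction <= LOW_PRESSURE_FRACTION:
        return Action.LIMIT_SPEED
    return Action.CONTINUE


def pulled_over_in_time(detected_at_s: float, stopped_at_s: float,
                        deadline_s: float = PULL_OVER_DEADLINE_S) -> bool:
    """Evidence check: did the vehicle stop within the deadline after detection?"""
    elapsed = stopped_at_s - detected_at_s
    return 0.0 <= elapsed <= deadline_s


print(respond_to_tire_pressure(240.0, 240.0))  # Action.CONTINUE
print(respond_to_tire_pressure(170.0, 240.0))  # Action.LIMIT_SPEED
print(respond_to_tire_pressure(100.0, 240.0))  # Action.PULL_OVER
print(pulled_over_in_time(10.0, 45.0))         # True
```

The second function plays the role that the telltale test plays today: it replaces "did the light turn on?" with "did the vehicle reach a safe stop in time?", which is a test that still makes sense without a dashboard.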

Regulators will need a process for documenting the safety case for each requested FMVSS deviation. They must decide whether they should evaluate safety cases up front or employ less direct feedback approaches such as post-mishap litigation. Regardless of approach, the safety cases can be made public, because they will describe a way to test vehicles for basic safety, and not the inner workings of highly proprietary autonomy algorithms.

Implementing this approach only requires vehicle makers to do extra work for FMVSS deviations that provide their products with a competitive advantage. Over time, it is likely that a set of standardized industry approaches for typical vehicle designs will emerge, reducing the effort involved. And if an FMVSS requirement is truly irrelevant, a safety case can explain why.

While there is much more to self-driving car safety than FMVSS compliance, we should not be moving backward by abandoning accepted vehicle safety requirements. Instead, a safety case approach will enable self-driving car makers to innovate as rapidly as they like, with a pay-as-you-go burden to justify why their alternative approaches to providing existing safety capabilities are adequate.

Author info: Prof. Koopman has been helping government, commercial, and academic self-driving developers improve safety for 20 years.

Contact: koopman@cmu.edu

Originally published in The Hill 6/30/2018:

http://thehill.com/opinion/technology/394945-how-to-keep-self-driving-cars-safe-when-no-one-is-watching-for-dashboard