I'm very pleased to share a publication from our NREC autonomy validation team that explains how computationally cheap image perturbations and degradations can expose catastrophic perception brittleness issues. You don't need adversarial attacks to foil machine learning-based perception -- straightforward image degradations such as blur or haze can cause problems too.

Our paper "Putting image manipulations in context: robustness testing for safe perception" will be presented at IEEE SSRR August 6-8. Here's a submission preprint:

https://users.ece.cmu.edu/~koopman/pubs/pezzementi18_perception_robustness_testing.pdf

Abstract: We introduce a method to evaluate the robustness of perception systems to the wide variety of conditions that a deployed system will encounter. Using person detection as a sample safety-critical application, we evaluate the robustness of several state-of-the-art perception systems to a variety of common image perturbations and degradations. We introduce two novel image perturbations that use “contextual information” (in the form of stereo image data) to perform more physically-realistic simulation of haze and defocus effects. For both standard and contextual mutations, we show cases where performance drops catastrophically in response to barely perceptible changes. We also show how robustness to contextual mutators can be predicted without the associated contextual information in some cases.
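To give a feel for what a depth-aware ("contextual") haze mutation can look like, here is a minimal sketch. It is not the paper's implementation. It assumes the widely used atmospheric scattering model, in which each pixel is blended toward a uniform airlight value by a transmission term `exp(-beta * depth)`, and it assumes depth is recovered from stereo disparity with the pinhole relation `depth = focal * baseline / disparity`. The function names, the `beta` and `airlight` parameters, and the grayscale nested-list image layout are all hypothetical choices made for illustration.

```python
import math


def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Pinhole stereo relation: depth = focal * baseline / disparity (metres)."""
    if disparity_px <= 0:
        return math.inf  # no valid stereo match: treat the pixel as infinitely far
    return focal_px * baseline_m / disparity_px


def add_haze(image, depth_m, beta=0.05, airlight=1.0):
    """Blend each pixel toward the airlight value according to its depth.

    image:    rows of grayscale intensities in [0, 1]
    depth_m:  rows of per-pixel depths in metres, same shape as image
    beta:     assumed scattering coefficient (1/m), must be > 0;
              larger values mean thicker haze
    airlight: assumed intensity of the haze itself
    """
    hazy = []
    for image_row, depth_row in zip(image, depth_m):
        out_row = []
        for pixel, depth in zip(image_row, depth_row):
            transmission = math.exp(-beta * depth)  # 1 at the camera, 0 far away
            out_row.append(pixel * transmission + airlight * (1.0 - transmission))
        hazy.append(out_row)
    return hazy


# Usage: a dark pixel at 5 m, 50 m, and with no stereo match.
depths = [[disparity_to_depth(d, focal_px=500.0, baseline_m=0.2) for d in (20.0, 2.0, 0.0)]]
print(add_haze([[0.2, 0.2, 0.2]], depths))
```

The point of using depth is that nearby objects stay nearly untouched while distant ones wash out, which a uniform contrast reduction applied to the whole image cannot reproduce.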

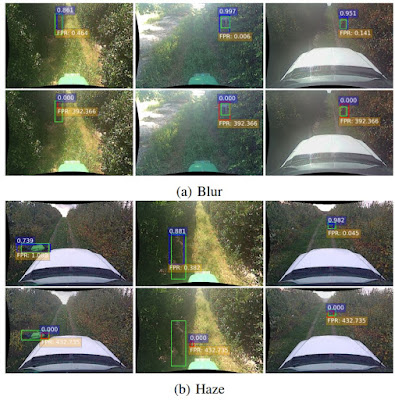

Fig. 6: Examples of images that show the largest change in detection performance for MS-CNN under moderate blur and haze. For all of them, the rate of FPs per image required to detect the person increases by three to five orders of magnitude. In each image, the green box shows the labeled location of the person. The blue and red boxes are the detection produced by the SUT before and after mutation respectively, and the white-on-blue text is the strength of that detection (ranged 0 to 1). Finally, the value in white-on-yellow text shows the average FP rate per image that a sensitivity threshold set at that value would yield; i.e., that is the required FP rate to still detect the person.
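The "required FP rate" in the caption can be read as follows: lower the detector's sensitivity threshold until the person's detection is just accepted, then count how many false positives per image that threshold lets through across the test set. The sketch below illustrates that reading with made-up scores. The function name, the `>=` acceptance convention, and the numbers are assumptions for illustration, not taken from the paper's evaluation code.

```python
def required_fp_rate(person_score, false_positive_scores, num_images):
    """Average false positives per image if the threshold equals person_score.

    person_score:          detection strength (0 to 1) of the true person detection
    false_positive_scores: strengths of all false-positive detections in the test set
    num_images:            number of images in the test set
    """
    if num_images <= 0:
        raise ValueError("num_images must be positive")
    # A false positive is accepted if it scores at least as high as the person.
    accepted = sum(1 for score in false_positive_scores if score >= person_score)
    return accepted / num_images


# Hypothetical false-positive scores collected over a 10-image test set.
fp_scores = [0.95, 0.60, 0.40, 0.30, 0.05]

before = required_fp_rate(0.90, fp_scores, num_images=10)  # strong detection
after = required_fp_rate(0.20, fp_scores, num_images=10)   # weakened by mutation
print(before, after)
```

When a mutation drags the person's detection strength down, the threshold has to drop with it, and every false positive that scores above the new threshold comes along.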

Alternate slide download link: https://users.ece.cmu.edu/~koopman/pubs/pezzementi18_perception_robustness_testing_slides.pdf

Citation:

Pezzementi, Z., Tabor, T., Yim, S., Chang, J., Drozd, B., Guttendorf, D., Wagner, M., & Koopman, P., "Putting image manipulations in context: robustness testing for safe perception," IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Aug. 2018.
