These are the slides I presented at the AAMVA International Conference, August 22, 2018 in Philadelphia PA.

It's an update of my PennDOT AV Summit presentation from earlier this year. A key takeaway is the lesson we should be learning from the tragic Uber fatality in Tempe, AZ earlier this year:

- Do NOT blame the victim

- Do NOT blame the technology

- Do NOT blame the driver

INSTEAD -- figure out how to make sure the safety driver is actually engaged even during long, monotonous road testing campaigns. AND actually measure driver engagement so problems can be fixed before there is another avoidable testing fatality.

Even better is to use simulation to minimize the need for road testing. But given that testers are out on the road operating, there needs to be a credible safety argument that they will be no more dangerous than conventional vehicles while operating on public roads.

Wednesday, August 22, 2018

Tuesday, August 14, 2018

ADAS Code of Practice

One of the speakers at AVS last month mentioned that there was a Code of Practice for ADAS design (basically, level 1 and level 2 autonomy). And that there is a proposal to update it over the next few years for higher autonomy levels.

A written set of uniform practices is generally worth looking into, so I took a look here:

https://www.acea.be/uploads/publications/20090831_Code_of_Practice_ADAS.pdf

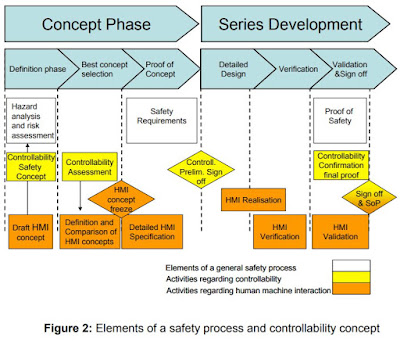

The main report sets forth a development process with a significant emphasis on controllability. That makes sense, because for ADAS typically the safety argument ultimately ends up being that the driver will be responsible for safety, and that requires an ability for the driver to assert ultimate control over a potentially malfunctioning system.

The part that I actually found more interesting in many respects was the set of Annexes, which include quite a number of checklists for controllability evaluation, safety analysis, and assessment methods as well as Human-Machine Interface concept selection.

I'd expect that this is a useful starting point for those working on higher levels of autonomy, and most critically anyone trying to take on the very difficult human/machine issues involved with level 2 and level 3 systems. (Whether it is sufficient on its own is not something I can say at this point, but starting with something like this is usually better than a cold start.)

If you have any thoughts about this document please let me know via a comment.

Monday, August 6, 2018

The Case for Lower Speed Autonomous Vehicle On-Road Testing

Every once in a while I hear about a self-driving car test or deployment program that plans to operate at lower speeds (for example, under 25 mph) to lower risk. Intuitively that sounds good, but I thought it would be interesting to dig deeper and see what turns up.

There is certainly room for reasonable safety arguments at speeds above 20 mph. I'm just pointing out that testing time spent at or below 20 mph is inherently less risky if a pedestrian collision does occur. So minimizing the exposure to high speed operation is a way to improve overall safety in the event a pedestrian impact does occur.

There have been a few research projects over the years looking into the probability of a fatality when a conventionally driven car impacts a pedestrian. As you might expect, faster impact speeds increase fatalities. But it's not linear -- it's an S-shaped curve. And that matters a lot:

(Source: WHO http://bit.ly/2uzRfSI )

Looking at this data (and other similar data), impacts at less than 20 miles an hour have a flat curve near zero, and are comparatively survivable. Above 30 mph or so is a significantly bigger problem on a per-incident basis. Hmm, maybe the city planners who set 25 mph speed limits have a valid point! (And surely they must have known this already.) In conventional vehicles the flat curve at and below 20 has led to campaigns to nudge urban speed limits lower, with slogans such as "20 is plenty."

For on-road autonomous vehicle testing there's a message here. Low speed testing and deployment carries dramatically less risk of a fatality. The risk of a fatality goes up dramatically as speed increases beyond that.

For systems with a more complex "above 25 mph" strategy there still ought to be plenty that is either reused from the slow system or able to be validated at low speeds. Yes, slow is different than fast due to the physics of kinetic energy. But a strategy that validates as much as possible below 25 mph and then reuses significant amounts of that validation evidence as a foundation for higher speed validation could present less risk to the public. For example, if you can't tell the difference between a person riding a bike and a person walking next to a bike at 25 mph, you're going to have worse problems at 45 mph. (You might say "but that's not how we do it." My point is maybe the AV industry should be optimizing for validation, and this is the way it should get done.)
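To put a rough number on the "physics of kinetic energy" point, here is a minimal sketch that compares kinetic energy at a few speeds. The vehicle mass and the function names are my own illustrative assumptions, not measurements from any test program. Kinetic energy is also not the same thing as fatality risk, so this only shows the energy side of the argument.

```python
# Illustrative sketch only: kinetic energy grows with the square of speed.
# The mass below is a hypothetical round number, not data from any vehicle.

MPH_TO_MPS = 0.44704  # 1 mph is exactly 0.44704 m/s


def kinetic_energy_joules(mass_kg: float, speed_mph: float) -> float:
    """Return kinetic energy (0.5 * m * v^2) in joules for a speed given in mph."""
    speed_mps = speed_mph * MPH_TO_MPS
    return 0.5 * mass_kg * speed_mps ** 2


def energy_ratio(speed_a_mph: float, speed_b_mph: float) -> float:
    """Return how many times more kinetic energy speed A carries than speed B.

    Mass cancels out, so the ratio depends only on the two speeds.
    """
    return (speed_a_mph / speed_b_mph) ** 2


if __name__ == "__main__":
    mass_kg = 1800.0  # hypothetical test vehicle mass (assumption)
    for speed_mph in (20, 25, 35, 45, 55):
        energy_kj = kinetic_energy_joules(mass_kg, speed_mph) / 1000.0
        ratio = energy_ratio(speed_mph, 25)
        print(f"{speed_mph} mph: {energy_kj:.1f} kJ ({ratio:.2f}x the 25 mph energy)")
```

Because the ratio is just the square of the speed ratio, a vehicle at 45 mph carries about 3.24 times the kinetic energy it has at 25 mph, whatever its mass.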

It's clear that many companies are in a "race" to autonomy. But sometimes slow and steady can win the race. Slow speed runs might be less flashy, but until the technology matures slower speeds could dramatically reduce the risk of pedestrian fatalities due to a test platform or deployed system malfunction. Maybe that's a good idea, and we ought to encourage companies who take that path now and in the future as the technology continues to mature.

The "above 25 mph" paragraph was added in response to social media comments 8/9/2018. And despite that I still got comments saying that systems below 25 mph are completely different than higher speed systems. So in case that point isn't clear enough, here is more on that topic:

I'm not assuming that slow and fast systems are designed the same. Nor am I advocating for limiting AV designs only to slow speeds (unless that fits the ODD).

I'm saying when you build a high-speed capable AV, it's a good idea to initially test at below 25 mph to reduce the risk to the public for when something goes wrong. And something WILL go wrong. There is a reason there are safety drivers.

If a system is designed to work properly at speeds of 0 mph to 55 mph (say), you'd think it would work properly at 25 mph. And you could design it so that at 25 it's using most or all of the machinery that is being used at 55 mph (SW, HW, sensors, algorithms, etc.). Yes, you can get away with something simpler at low speed. But this is low speed testing, not deployment. Why go tearing around town at high speed with a system that hasn't even been proven at lower speeds? Prove it out at low speed first, then bump up speed once you've built confidence.

If you design to validate as much as possible at lower speeds, you lower the risk exposure. Sure, investors probably want to see max. speed operation as soon as possible. But not at the cost of dead pedestrians because testing was done in a hurry.

Notes for those who like details:

The impact speed is potentially different than vehicle speed. If the vehicle has time to shed even 5 or 10 mph of speed at the last second before impact that certainly helps, potentially a lot, even if the vehicle does not come to a complete stop before impact. But a slower vehicle is less dependent upon that last second braking (human or automated) working properly in a crisis.

The actual risk will depend upon circumstances. For example, since the 1991 data shown was collected, it seems likely that emergency medical services have improved, reducing fatality rates. On the other hand, the increasing prevalence of SUVs might increase fatality rates depending upon impact geometries. And so on. A study that compares multiple data sets is here:

https://nacto.org/docs/usdg/relationship_between_speed_risk_fatal_injury_pedestrians_and_car_occupants_richards.pdf

But, all that aside, all the data I've seen shows that traditional city speed limits (25 mph or less) help with reducing pedestrian fatalities.
